The Four Dimensions of AI Scaling

Is AI model scaling hitting a wall? There is no wall. The path to AI scaling is not just up, but down, out, and across.

The Case of the Missing Big AI Foundation Models

Since 2023, we have noticed two trends: Smaller AI models have become much more efficient, performing as well as or even better than larger AI models from the previous generation. At the same time, bigger AI models have trouble improving performance significantly. These trends have gotten more pronounced in the last six months:

Last fall it was reported that OpenAI’s internal training runs for GPT-5 were falling short of expectations and running behind schedule. Early in 2025, we got similar news about Meta scrambling after DeepSeek’s R1 release Llama 4 training, as Meta recognized Llama 4 training was falling short.

OpenAI’s big non-reasoning AI model GPT-4.5 drew mixed reviews, and many users found it “underwhelming” in benchmarks and tasks, while OpenAI tried to sell the model for its ‘vibes’ and “emotional intelligence” instead of reasoning or benchmark performance.

Llama 4’s initial release in April was likewise underwhelming, and Meta even got a PR black eye for gaming benchmark results to make them look better than they were in real-world use.

While some large AI model releases have been disappointing, others never showed up at all:

Anthropic released Claude 3.5 Sonnet in June 2024 and Claude 3.5 Opus was promised for late 2024 as a follow up. It never arrived.

After Gemini 1.0 Opus, the largest AI model from Google since has been Gemini Pro, including the current SOTA Gemini 2.5 Pro.

Recent non-reasoning large AI model releases are underwhelming because they are not competitive compared to AI reasoning models on many complex tasks. AI reasoning models are simply better.

Yet AI models have improved remarkably in key features in the past year: multimodality, tool use, and reasoning. They have also gotten more efficient, with today’s small AI models outperforming last generation bigger AI models. More bang for the buck has come from innovations besides raw scaling of pre-training.

We’ve gone from “scale is all you need” to “you need something besides scaling.”

The Pre-training Slowdown

There are several challenges to scaling pre-training for AI models: Diminishing returns and limits on data quality and quantity hamper AI performance scaling. Meanwhile, AI developers are improving AI models in more fruitful ways, by improving efficiency of smaller AI models and training AI models to reason with RL.

Diminishing returns: The scaling laws posit that AI model performance gains are log-linear on the increase in data, parameters, and training compute in pre-training. Thus, performance gains diminish relative to required investments in training. More parameters make AI models slower and more expensive to run during inference, a poor trade-off.

Mixture-of-experts (MoE) models mitigate the inference cost since they only activate a fraction of their parameters. Llama 4 Maverick and Scout, DeepSeek V3 and R1, and two of the Qwen 3 models are MoE models.

Scaling Data: Scaling on data requires ever-increasing quantities of high-quality data, but ‘there is only one internet’ as Ilya Sutskever observed. AI model builders are using AI to refine and optimize datasets and creating synthetic data to feed AI models data for training. Qwen 3 made effective use of synthetic data to help their pre-training process.

Small is Beautiful

Smaller AI models, using distillation, pruning, RL for reasoning, and more efficient training, can punch way above their weight, with performance near state-of-the-art yet being much smaller and cheaper. In some cases, smaller AI models can even outdo big AI models on many real-world tasks.

The recently released Qwen 3 models exemplify this, with smaller Qwen 3 AI models distilled from larger Qwen 3 models and state-of-the-art for their size. The tiny Qwen 3-4B model is incredibly effective for its size, outperforming GPT-4o, Qwen 2.5 72B, and DeepSeek V3 on many math and code benchmarks.

Smaller AI models with better inference-time efficiency deliver near state-of-the-art AI model results at far lower cost. This drives greater adoption by deriving more AI value from available GPUs and saving money by providing more for less.

Scaling Reasoning

“Importantly, because this type of RL is new, we are still very early on the scaling curve: the amount being spent on the second, RL stage is small for all players. Spending $1 M instead of $0.1 M is enough to get huge gains. Companies are now working very quickly to scale up the second stage … but it’s crucial to understand that we’re at a unique ‘crossover point’ where there is a powerful new paradigm that is early on the scaling curve and therefore can make big gains quickly.” - Dario Amodei

The release of o1 and the rise of AI reasoning has opened up a new dimension of scaling AI model performance. This consists of two related techniques: Scaling AI reasoning with RL post-training; and test-time scaling, where models scale performance by using more thinking tokens during inference. Combined they give us AI reasoning models.

As Dario Amodei noted in his essay “On DeepSeek and Export Controls” we are still in the early days of scaling AI reasoning, because the amount of RL for reasoning being done is much smaller than pre-training (and its cost is much less). While the Qwen 3 models required 36 trillion tokens to do pre-training, the R1 post-training reasoning RL used under 1 million examples.

There are more gains from incrementally scaling post-training RL for reasoning more aggressively. This is perhaps how we got OpenAI’s o4-mini, perhaps the most efficient high-performance AI reasoning model yet.

Expect AI model releases in the near term to focus on scaling post-training RL for reasoning, at least until that scales up to become a significant cost relative to the cost of pre-training.

Scaling Down and Out

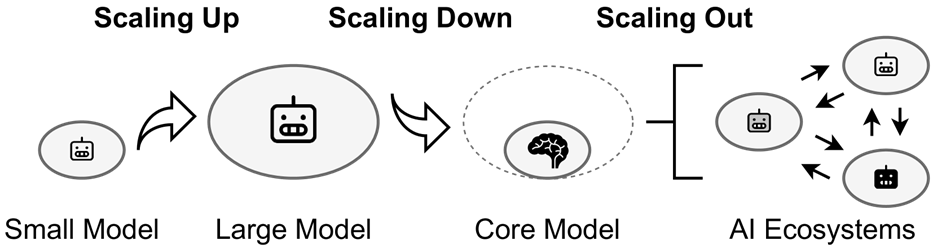

The paper AI Scaling: From Up to Down and Out examined the bottlenecks, limits and costs of traditional AI scaling, and proposed an alternative approach to improve AI systems.

The authors point out that “scaling down” AI models through pruning, distillation, LoRA, and quantization make for better core AI models – lightweight yet powerful. Building on these AI models, the authors envision “open and accessible AI ecosystems” based on “lightweight core models as flexible building blocks.” Building up this AI ecosystem is “scaling out.”

The authors state that “the future trajectory of AI scaling lies in Scaling Down and Scaling Out.”

When AI reasoning was developed from RL fine-tuning and test-time compute, this was recognized and hailed as a new dimension of scaling. Recognizing AI model improvements in efficiency and multi-agent systems as distinct types of scaling is valid and useful terminology, which recognizes the contribution of various methods of advancing AI.

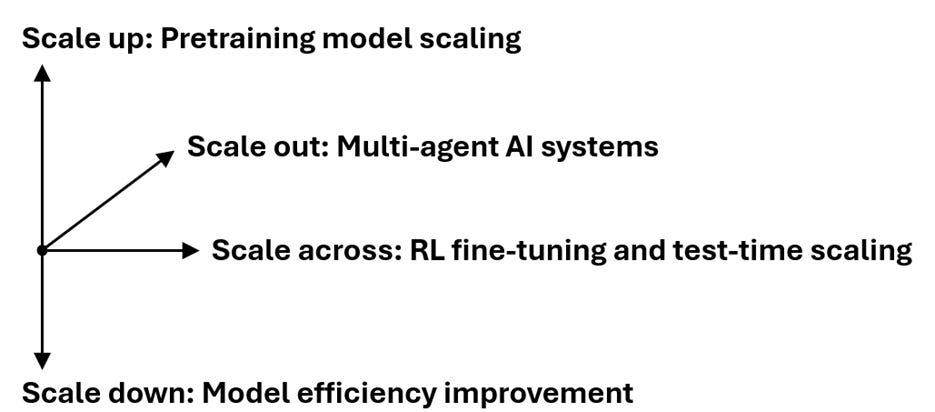

Putting it together gives us four dimensions of AI scaling: Scaling AI model pre-training is “scaling up.” Innovating in AI model efficiency is “scaling down.” Scaling RL fine-tuning and test-time compute for reasoning is “scaling across.” Building out multi-agent AI ecosystems based on multiple AI models is “scaling out.”

Society of Mind

This vision of many small AI models working together to build complex AI systems isn’t new. Marvin Minsky, a foundational figure in AI, proposed a compelling vision in the 1970s for how both human and AI minds work: “Society of Mind.”

Minsky theorized that the mind is a vast collection, or "society" of numerous simpler, specialized processes called "agents." In Minsky's vision, each agent in this Society of Mind is relatively simple and performs a specific, narrow task. Intelligence arises from how these agents communicate, compete, cooperate, and organize themselves. This concept evokes other concepts of complexity arising from simple components interacting, such as Wolfram’s Cellular Automata.

In this framework, truly intelligent AI is built by designing and assembling agents and mechanisms for their interaction, developing complex intelligence by organizing vast collections of specialized, cooperating agents.

Organizational AI and Agent Swarms

AI based on collaborative agents also sounds a lot like the multi-agent swarms, based on AI models that are far more complex than cellular automata or Minsky’s simple agents. These AI agent swarms would be built on AI foundation models capable of reasoning and tool calling, so they are able to interact in an agentic system.

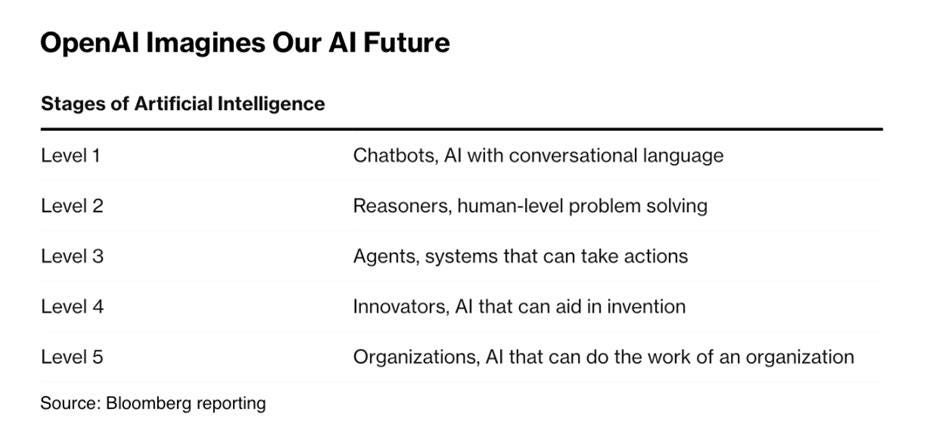

OpenAI’s long-term roadmap to AGI provides such a multi-agent AI system. Their roadmap envisions 5 levels of capabilities - AI chatbots, reasoning models, AI agents, AI innovator systems, culminating in a final level 5 – “Organizational AI” – that equates to AI capabilities of whole (human) organizations.

Organizational AI is ambitious in scope – these are superintelligent AI systems that organize and accomplish large complex tasks involving many sub-tasks and requiring many different skills. Comparing Organizational AI to current day AI is like comparing the brain-power of a whole corporation to that of an individual.

While multi-agent swarm AI systems already exist, they are far less complex than what OpenAI’s Level 5 envisions. Implementing Organizational AI requires harnessing and coordinating multiple AI agents into this complex system.

The Four Dimensions of Scaling

AI scaling is not dead. Rather, there has been a shift towards a multifaceted approach to AI development, from scaling AI models solely through scaling pre-training, into four dimensions of scaling - Up, Down, Across, and Out:

Dimension 1: Scaling Up – Pre-training Model Scaling

Scale compute while holding parameters steady to get the most performance out of every parameter.

Scale data using AI to curate and refine data for improved data quality; create synthetic data from AI to augment training data.

Use fine-grained mixture-of-experts (MoE) for greater inference efficiency.

Dimension 2: Scaling Down –Model Efficiency Improvement

Use distillation, pruning, quantization, and other techniques to further compress AI models to get the most capability in the least amount of parameters, leading to more cost-efficient AI models.

Dimension 3: Scaling Across – RL fine-tuning and Test-time Scaling for Reasoning

Scale RL fine-tuning in post-training to obtain gains in reasoning performance on the same base model.

Scaling across is also about improving the other capabilities of AI models, including performance in agentic tasks such as tool calling.

Dimension 4: Scaling Out – Multi-Agent AI Systems

Scale multi-agent AI systems by increasing the number, diversity and types of AI agents within the system, improving the capability of the overall AI system.

Conclusion

AI models are continuing to rapidly improve, achieving better performance and broader capabilities by scaling across multiple dimensions: scaling up, down, across, and out. If there is a wall, AI model developers are working around it.

AI model developers improve new AI models by scaling training where the most gains are made for the least added effort or cost. For now, the leverage is greatest by scaling down (with distillation) and across (with RL for reasoning). We can expect rapid scaling of RL for reasoning in 2025, since that is the lowest-hanging fruit for performance gains.

Scaling AI model pre-training will continue, but improvements in AI models will be driven by innovations in training efficiency rather than increasing model parameter count. Pretraining compute will scale far more than parameter counts. To optimize for inference efficiency, fine-grained MoE architectures will be adopted. AI will be used to synthesize data to improve data quality and quantity in training.

OpenAI’s vision of Organizational AI involves massively scaling out multi-agent AI systems. This requires an AI ecosystem to support such multi-agent AI systems, which is being developed with protocols (like MCP and A2A) and AI models built for multi-agent interaction. Over the next few years, we can expect the “scale out” development of increasing larger and more capable multi-agent AI systems.