AI Week In Review 24.05.18

GPT-4o, Google I/O: Veo, Imagen 3, Gemma 2, Gemini 1.5 Flash, Gemini 1.5 Pro 2M, Project Astra, AI Overviews in Search, ZeroGPU, Buzz dataset, MMLU-Pro benchmark, OpenAI Alignment team quits.

TL;DR - OpenAI’s GPT-4o and Google I/O’s broad list of announcements show major advances in multi-modal AI. We can now use text, voice, and images interchangeably in both Gemini 1.5 and GPT-4o frontier models.

AI Tech and Product Releases

This week’s biggest release is OpenAI’s GPT-4o (the “o” stands for “omni”), a major advance in multi-modal AI with leaps in integrated audio, image, and text understanding, reasoning, and generation. We covered Monday’s GPT-4o demo in “OpenAI Goes Omni: GPT-4o.” Highlights of GPT-4o:

A conversational voice interface with low latency and expressive audio generation: it can express emotion, even sing, and users can interrupt it. The demos used a “Her”-like voice interface.

It can tell different voices apart, so it can accurately transcribe a meeting with multiple people and understand and respond to different users.

Video and image understanding; ability to explain and detect scenes.

GPT-4o is faster, cheaper, and available on the free tier of ChatGPT. This means all ChatGPT users are on GPT-4o, effectively obsoleting prior ChatGPT models.

We show more GPT-4o use cases and hidden gems in the ‘top tools’ section below.

Google I/O mentioned AI 120 times and announced 100 new things. Our recap article “Google I/O '24: A Gaggle of AI Updates” shared details on the breadth of announcements, with some highlights being:

Gemini 1.5 Pro 1 million token context window available to all, with 2 million context available for preview.

Gemini 1.5 Flash, a fast, cost-efficient, fully multi-modal model.

Gemma 2 27B open model coming this summer, and multi-modal PaliGemma 3B.

Veo, their new AI video generation model producing 1080p output.

Imagen 3, their upgraded AI image generation model.

Project Astra, Google’s approach to multi-modal AI agents, was demoed, showing that it can understand and reason about its visual surroundings and converse in audio.

AI Overviews in Search, using Gemini to upgrade the Search experience.

NotebookLM audio: They showed a demo of it acting like a podcasting AI tutor (similar to the GPT-4o tutoring demo).

Many additional AI features across Google applications.

Google DeepMind also released a new framework to assess the dangers of AI models. The Frontier Safety Framework is described as “examining how AI can cause harm and defines the minimum capabilities required to do so.”

Hugging Face is launching ZeroGPU, a shared infrastructure for indie and academic AI builders to run AI demos on Spaces, giving open source developers the freedom to pursue their work without the financial burden of compute costs. Hugging Face is committing $10M of free GPUs with the launch of ZeroGPU, to foster the open source AI ecosystem and help developers compete with Big Tech firms in AI.

Alignment Labs AI just released Buzz, a large dataset for fine-tuning. It’s an instruction dataset with 31 million rows and a total of 85 million conversations in single- and multi-turn formats. It comes in 3 configurations: Buzz (SFT), RLSTACK (RLHF), Select Stack (filtered SFT).

The MMLU benchmark is getting dated, so TIGER-Lab has introduced the MMLU-Pro dataset for LLM benchmarking. To go from MMLU to MMLU-Pro, several improvements were made: questions were added; multiple-choice answers increased from 4 to 10; problem difficulty was increased; and more reasoning-focused problems were added.
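One way to see why the jump from 4 to 10 answer choices matters is to look at the score a model would get from pure guessing. The snippet below is a minimal sketch of that arithmetic, not part of the MMLU-Pro tooling; the helper name `chance_accuracy` is made up for illustration, and it assumes every question carries the full number of options and that guesses are uniformly random.

```python
def chance_accuracy(num_options: int) -> float:
    """Expected accuracy of uniform random guessing on a multiple-choice question.

    Hypothetical helper for illustration; assumes exactly one correct answer
    and that every question has `num_options` choices.
    """
    if num_options < 1:
        raise ValueError("num_options must be at least 1")
    return 1.0 / num_options


# 4 choices per question (MMLU) versus 10 choices per question (MMLU-Pro).
mmlu_baseline = chance_accuracy(4)
mmlu_pro_baseline = chance_accuracy(10)

print(f"MMLU (4 options) chance accuracy: {mmlu_baseline:.0%}")
print(f"MMLU-Pro (10 options) chance accuracy: {mmlu_pro_baseline:.0%}")
```

Under these assumptions the guessing floor drops from 25% to 10%, so a given score on MMLU-Pro reflects less luck than the same score on MMLU.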

Top Tools & Hacks

GPT-4o is a huge AI model advance, because it has pushed the boundaries of multi-modal AI model capabilities, so it earns our Top Tool for this week. GPT-4o has a remarkable number of use cases and capabilities not revealed in the demo. Some examples:

Consistent character image generation, where you can input a character and ask to output an image based on a story around them. It’s good enough to make a comic strip or a whole storyboard.

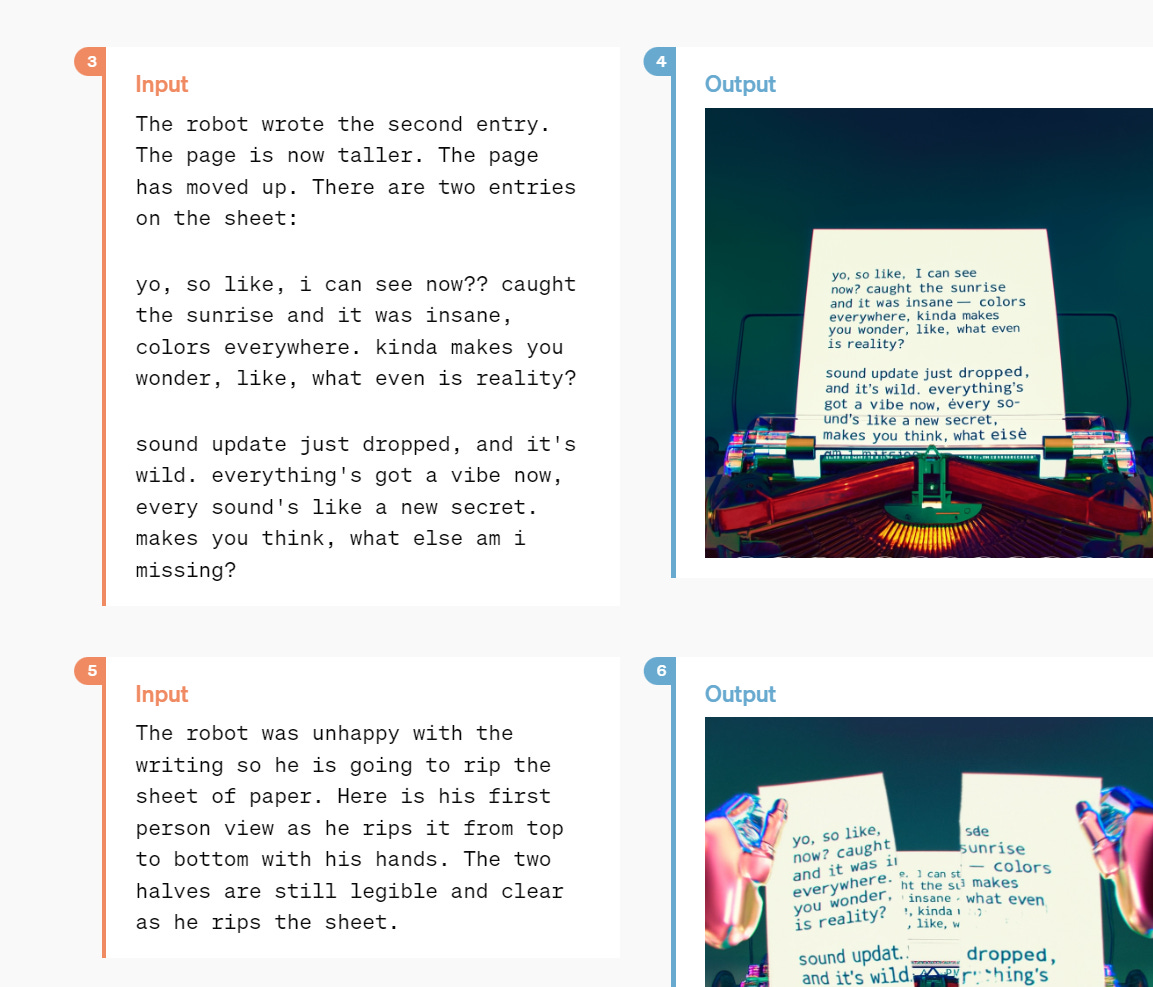

Text generation in the image output that goes far beyond any previous AI image generation (see Figure 1). This can do font generation, and output a whole poem in cursive script as an image (poetic typography). It can also turn any data source like a spreadsheet into a chart.

Video summarization is similar to what Gemini can do already, but producing transcripts that separate individual speakers is a next-level feature. Movies-to-screenplays in a single prompt?

With image input, you can hand-write questions, math problems, and diagrams and get answers; do ‘sketch to website’; or take a room photo and get design ideas.

Image output extends to 3D output.

There are many other use cases. One caveat: ChatGPT in “GPT-4o mode” is not yet running all the features. Features are rolling out in the coming days, weeks, and months.

AI Research News

Our AI research highlights for this week included a paper on The Platonic Representation Hypothesis, which suggests that the representations learned by AI models will converge as the models grow bigger and get better.

Two papers on VLMs and multi-modal models show how to get merged text and image inputs and outputs out of AI models:

Chameleon: Mixed-Modal Early-Fusion Foundation Models

Idefics2: What matters when building vision-language models?

Other papers show advances in LLM planning (ALPINE), 3D generation (CAT3D) and fine-tuning (Online RLHF):

ALPINE: Unveiling the Planning Capability of Autoregressive Learning in Language Models

CAT3D: Create Anything in 3D with Multi-View Diffusion Models

RLHF Workflow: From Reward Modeling to Online RLHF

AI Business and Policy

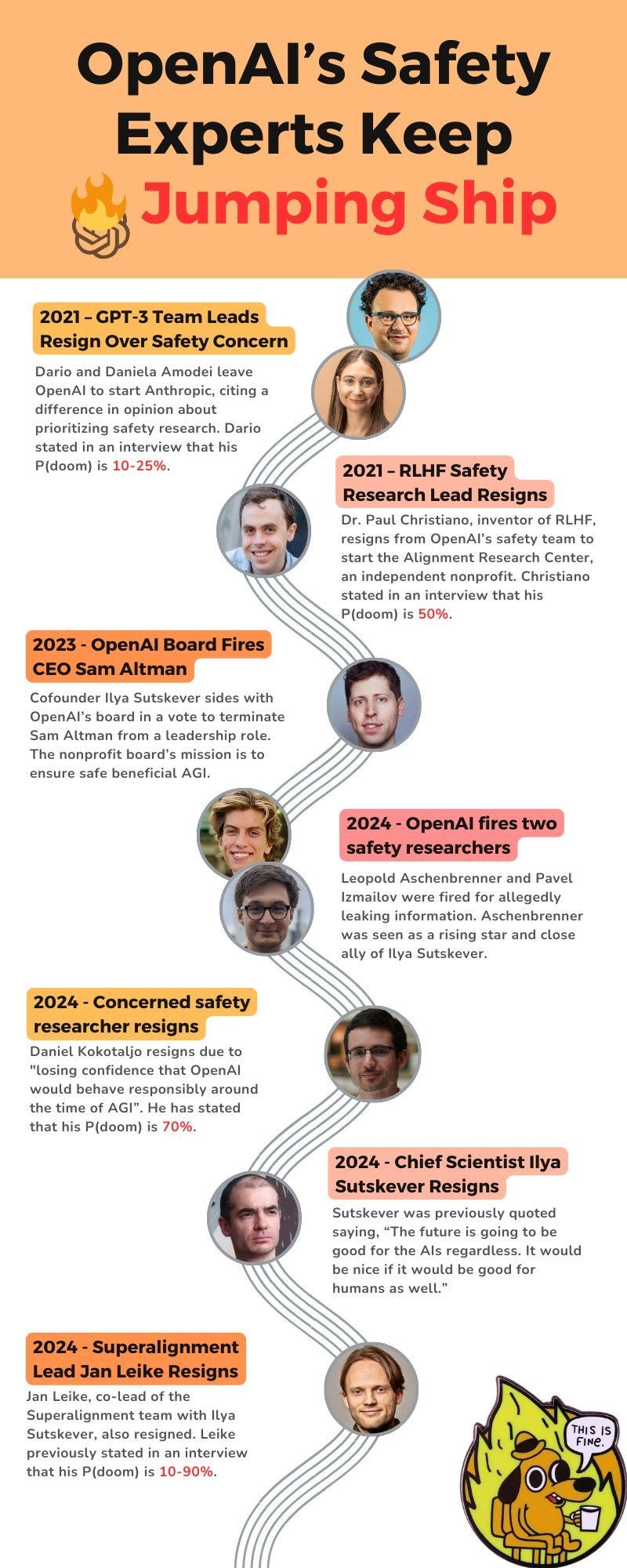

Ilya Sutskever announced he is leaving OpenAI, OpenAI’s superalignment lead Jan Leike also quit, and other safety team members resigned. What’s behind these moves? OpenAI created a team to control ‘superintelligent’ AI — then let it wither. As Leike put it, “safety culture and processes have taken a backseat to shiny products.”

The exits drew enough concern for Greg Brockman to explain OpenAI’s safety strategy on X. However, it was vague and aspirational, with no commitments:

We know we can't imagine every possible future scenario. So we need to have a very tight feedback loop, rigorous testing, careful consideration at every step, world-class security, and harmony of safety and capabilities. We will keep doing safety research targeting different timescales. We are also continuing to collaborate with governments and many stakeholders on safety.

In other AI leadership news, Instagram co-founder Mike Krieger joins Anthropic as Chief Product Officer.

Recall AI announced it has raised $10 million in funding. The Recall AI API pulls conversational data from Zoom and Google Meet to power AI bots.

xAI Nears $10 Billion Deal to Rent Oracle’s AI Servers. Elon Musk's AI startup xAI is negotiating with Oracle to rent cloud servers over a period of years for its AI development. xAI is also finalizing a $6 billion funding round.

The Economist claims that Big tech’s capex splurge may be irrationally exuberant.

In recent weeks four tech giants—Alphabet, Amazon, Meta and Microsoft—have pledged to spend close to a total of $200bn this year, mostly on data centres, chips and other gear for building, training and deploying generative-AI models. That is 45% more than last year’s blowout. Tech barons such as Meta’s Mark Zuckerberg admit that it may be years before this investment generates returns.

Two voice actors sue an AI company for creating clones of their voices without their permission.

The EU Commission wants to compel Microsoft to disclose gen AI risks on Bing, under the Digital Services Act, or face fines.

ISIS is using AI to generate fake news, with a new AI-generated media program called News Harvest. “It shows how artificial intelligence can be used to disseminate extremist propaganda quickly and cheaply.”

AI Opinions and Articles

AI eats the web. “Google's shift toward AI-generated search results, displacing the familiar list of links, is rewiring the internet — and could accelerate the decline of the 30+-year-old World Wide Web,” says Scott Rosenberg.

A developer who got AI to write his code for him asks: When AI helps you code, who owns the finished product? The answer is confusing. Specific AI-generated content cannot itself be copyrighted since it has not been created by a human being, but someone can copyright a compilation that includes such content.

What does that mean for source code, where one line may be human-written (and therefore protected) while the next line may be AI-generated (and unprotected)? "It's a mess," the attorney admitted.

It’s a mess indeed. Copyright for source code may lose its meaning and value in the era of AI, when it’s so easy to independently replicate code functions using AI. Software firms will either just go open source or find other ways to protect their IP.

“We’ve run into this mess at ludicrous speed, blithely unaware that using these AI-powered coding tools turns the copyright protections every software firm takes for granted into a sort of Swiss cheese of loopholes, exceptions, and issues to eventually be tested in court cases.” - Mark Pesce

A Look Back …

The news about the OpenAI Safety team members leaving is a reminder that this has happened before. OpenAI safety team members, including Dario and Daniela Amodei, left in 2021 over differences in prioritizing AI safety; they started Anthropic soon after.

Dario Amodei explains why he left OpenAI:

There was a group of us within OpenAI, that in the wake of making GPT-2 and GPT-3, had a kind of very strong focus belief in two things. … One was the idea that if you pour more compute into these models, they'll get better and better and that there's almost no end to this. … The second was the idea that you needed something in addition to just scaling the models up, which is alignment or safety. You don't tell the models what their values are just by pouring more compute into them. And so there were a set of people who believed in those two ideas. We … started our own company with that idea in mind.