Plugging Into the AI Model Ecosystem

ChatGPT Plug-Ins, HuggingGPT, LangChain, and the controller-executor architecture

Cover Image is courtesy of Two Minute Papers.

Building The AI Model Ecosystem

We have written previously about how foundation AI models need truth-grounding, and about several ways to make AI models more powerful, capable, accurate and reliable by going beyond the stand-alone single-prompt LLM response:

In “Truth-Grounding AI Models with Vector Databases,” we described how you can connect LLMs to relevant knowledge as context using vector databases and retrieval augmented generation.

In “Critique and Revise to Improve AI,” we described how LLMs can self-improve their results through reflection and iteration. LLMs do better when they do chain-of-thought reasoning step-by-step, or can critique, iterate and revise their initial answers.

In this article, we cover the most powerful way to add super-powers to the LLM: give the AI model the ability to connect to software tools or other AI models, so it can calculate directly and solve more complex problems. We can use ChatGPT plug-ins, HuggingGPT or LangChain to connect AI tools.

Bringing all three of the above capabilities together yields an AI ecosystem even more powerful than GPT-4 on its own. A single invocation of the LLM can be thought of as a subroutine call that takes text input and yields text output. Chaining these LLM calls and responses together can solve a variety of complex tasks that are out of reach for an LLM alone. The LLM becomes a building block of a larger ecosystem.
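To make the subroutine idea concrete, here is a minimal sketch of chaining in plain Python. It assumes nothing about any real model API: `fake_llm` is a hypothetical stand-in that only echoes its prompt, and `chain` and the prompt templates are illustrative names, not part of any library.

```python
from typing import Callable

# An "LLM call" is modeled as a plain function from text to text.
LLM = Callable[[str], str]


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call; it only echoes a tagged reply."""
    return f"<reply to: {prompt}>"


def chain(llm: LLM, templates: list[str], user_input: str) -> str:
    """Run the templates in order, feeding each call's output into the next prompt."""
    text = user_input
    for template in templates:
        text = llm(template.format(input=text))
    return text


if __name__ == "__main__":
    steps = ["Summarize: {input}", "Translate to French: {input}"]
    print(chain(fake_llm, steps, "a long article"))
```

Swapping `fake_llm` for a function that calls a real model would leave `chain` unchanged, which is the point: the text-in, text-out signature is what makes the calls composable.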

LLMs That Can Use Tools

Two months ago, the ground-breaking Toolformer paper came out from Meta AI Research, showing how LLMs could “teach themselves to use external tools via simple APIs.”

Their language model was trained to decide “which APIs to call, when to call them, what arguments to pass, and how to best incorporate the results into future token prediction.” The tools they hooked up included a calculator, search engine, calendar, and a Q&A system.
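As a rough illustration of what "calling an API from inside the text" can look like, the sketch below scans generated text for bracketed tool calls and splices in the results. The bracket syntax is loosely modeled on the examples in the Toolformer paper, but the parser, the `TOOLS` table and the `calculator` helper are assumptions made for this example, not the paper's implementation (which trains the model itself to emit such calls).

```python
import ast
import operator
import re

# Arithmetic operators the toy calculator accepts.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}


def calculator(expression: str) -> str:
    """Evaluate +, -, *, / arithmetic by walking the syntax tree (no eval())."""
    def walk(node):
        if isinstance(node, ast.Constant) and isinstance(node.value, (int, float)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](walk(node.left), walk(node.right))
        raise ValueError(f"unsupported expression: {expression!r}")
    return f"{walk(ast.parse(expression, mode='eval').body):.2f}"


# Hypothetical tool table; a fuller system would add search, calendar, Q&A.
TOOLS = {"Calculator": calculator}
CALL = re.compile(r"\[(\w+)\((.*?)\)\]")


def execute_tool_calls(text: str) -> str:
    """Replace each [Tool(args)] marker with [Tool(args) -> result]."""
    def run(match: re.Match) -> str:
        name, arg = match.group(1), match.group(2)
        if name not in TOOLS:
            return match.group(0)  # leave unknown tools untouched
        return f"[{name}({arg}) -> {TOOLS[name](arg)}]"
    return CALL.sub(run, text)


if __name__ == "__main__":
    print(execute_tool_calls("Of 1400 participants, 400 [Calculator(400 / 1400)] passed."))
```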

Microsoft had by this time already introduced Bing Chat, which hooked GPT-4 up to the Bing search engine to ground its answers to the questions asked of it.

ChatGPT Plug-Ins

OpenAI in March extended the power of ChatGPT with plug-ins. They initially announced a number of plug-ins already available, such as Wolfram (math computations and curated knowledge), Expedia and Kayak (travel and flight reservations), OpenTable (restaurant reservations), Zapier (app integrations), and Instacart and other shopping integrations. More impressive is their plug-in integration with a code interpreter, which can turn the LLM into an on-the-fly auto-executing AI:

We’re also hosting two plugins ourselves, a web browser and code interpreter. We’ve also open-sourced the code for a knowledge base retrieval plugin, to be self-hosted by any developer with information with which they’d like to augment ChatGPT.

The real power of ChatGPT plug-ins lies in opening the platform up to many third-party plug-ins. While plug-ins are currently in limited alpha, we may soon be in a world where practically any piece of software can be AI-enabled or, vice versa, the AI is enabled with the capabilities of that connected software. Opening up this ecosystem is the “App Store moment for AI” that will bring many innovations.

The plug-in API documentation is also interesting because it changes how software APIs will work. We don’t need to code against the API directly; the AI model will read the documentation, figure it out, and write its own connector. Software APIs will be displaced by natural language as an interface.

LangChain, the AI Model Connector

LangChain is an application framework built around LLMs that allows developers to create intelligent applications by ‘chaining’ AI model invocations together for advanced AI use cases. LangChain was designed with the idea that AI models needed to have these capabilities to be most powerful:

Be data-aware: connect a language model to other sources of data

Be agentic: allow a language model to interact with its environment

To that end, LangChain provides plug-and-play support for connecting models, prompts, memory, indexes, chains and agents. It is available for Python and JavaScript. LangChain provides a standard interface to access and integrate a variety of LLMs within applications.

Some examples of LangChain applications include autonomous agents, for example BabyAGI and AutoGPT, personal assistants, question answering bots, and chatbots. You can also use LangChain to embed AI models into other software applications, including web apps.

LangChain’s open and flexible design is already unleashing innovation among developers building intelligent applications, and it will surely play a pivotal role in the creation of many powerful AI applications going forward.

HuggingGPT aka Microsoft JARVIS: Controller-Executor Architecture

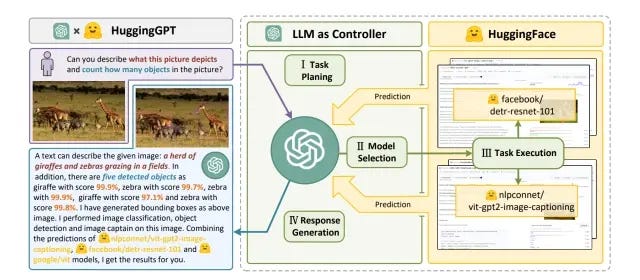

One leading example that really shows the way forward for how AI applications can be architected for great power and flexibility is HuggingGPT, also known as Microsoft JARVIS. They built a collaborative system that consists of an LLM, specifically ChatGPT, as the controller and numerous expert models, specifically the other models available on Hugging Face, as collaborative executors.

Why the two names? JARVIS is the name given on Github for the codebase released by Microsoft Researchers, while HuggingGPT is the name given in the paper describing the work. In the abstract, they state:

we present HuggingGPT, a framework that leverages LLMs (e.g., ChatGPT) to connect various AI models in machine learning communities (e.g., Hugging Face) to solve AI tasks. Specifically, we use ChatGPT to conduct task planning when receiving a user request, select models according to their function descriptions available in Hugging Face, execute each subtask with the selected AI model, and summarize the response according to the execution results.

So the process flow is: ChatGPT analyzes the request and plans the tasks. ChatGPT then selects a suitable model (hosted on Hugging Face) for each task. The selected model completes its task and returns the result to ChatGPT, which summarizes the results into a response for the user.
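The four stages (plan, select, execute, summarize) can be sketched in a few lines of Python. This is only an illustration under heavy assumptions: the `REGISTRY`, the model names and the keyword-based `plan_tasks` are hypothetical stubs. In HuggingGPT itself, planning and model selection are done by prompting ChatGPT, and the executors are real Hugging Face models.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ExpertModel:
    name: str
    description: str            # the controller picks models by their description
    run: Callable[[str], str]   # the executor: takes an input, returns a result


# Hypothetical registry standing in for a model hub.
REGISTRY = {
    "image-captioning": ExpertModel(
        "captioner-small", "describes an image", lambda x: f"caption of {x}"),
    "translation": ExpertModel(
        "translator-small", "translates English to French", lambda x: f"French for '{x}'"),
}


def plan_tasks(request: str) -> list[str]:
    """Stage 1, task planning. A real controller would ask the LLM; this stub uses keywords."""
    tasks = []
    if "image" in request:
        tasks.append("image-captioning")
    if "translate" in request:
        tasks.append("translation")
    return tasks


def handle(request: str, payload: str) -> str:
    results = []
    data = payload
    for task in plan_tasks(request):
        model = REGISTRY[task]      # Stage 2, model selection
        data = model.run(data)      # Stage 3, task execution; output feeds the next task
        results.append((model.name, data))
    # Stage 4, response generation (a real controller would have the LLM summarize).
    return "; ".join(f"{name}: {out}" for name, out in results)


if __name__ == "__main__":
    print(handle("describe this image and translate the caption", "cat.png"))
```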

By leveraging the many available Hugging Face models, JARVIS/HuggingGPT can accomplish a broad range of sophisticated AI tasks in language, vision, speech, and across modalities. It connects to data sources and integrates various open-source models for images, videos, audio, and more. A side benefit of this approach is that the system can use an appropriately sized model for each task. There is no need to use GPT-4-level power on text classification or translation; just use the simpler model.

This Beebom article explains how to use JARVIS / HuggingGPT. Like many of the Generative Agents, it is still so new that it is a bit rough around the edges, but even as is, it can do many useful things. Things are moving quickly now: a hobbyist tool today is a professional tool tomorrow.

You can add multiple tasks or a multi-step task in a single query. For example, you can ask it to generate an image based on some text and then ask it to write poetry about it. Or, you can enter a URL from a website and ask questions about it. On its own that is not so sophisticated, but chain 30 website queries with tasks to summarize them, and you have just created your “daily news summary” AI assistant. The power of chaining!

This is why I believe this Controller-Executor Architecture is the way.

The LLM Controller has enough intelligence to be instruction-following from natural language. Thanks to Toolformer we know it can be intelligent enough to learn how to select and interface with tools. The tools have abilities to solve extended specific problems. The controller architecture enables iteration, recursion, and multi-step problem solving.
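A hedged sketch of the multi-step loop this implies: the controller looks at the goal and the history of tool results, picks the next action, and stops when it decides it is done. Everything here is illustrative. `scripted_controller` is a hard-coded stand-in for an LLM, and the two tools are toy lambdas.

```python
from typing import Callable

Tool = Callable[[str], str]

# Toy tools; real executors would be search APIs, models, or other software.
TOOLS: dict[str, Tool] = {
    "search": lambda query: f"3 articles about {query}",
    "summarize": lambda text: f"summary of ({text})",
}


def scripted_controller(goal: str, history: list[str]) -> tuple[str, str]:
    """Hypothetical stand-in for the LLM controller: returns (action, argument)."""
    if not history:
        return ("search", goal)
    if len(history) == 1:
        return ("summarize", history[-1])
    return ("finish", history[-1])


def run_agent(goal: str,
              controller: Callable[[str, list[str]], tuple[str, str]] = scripted_controller,
              max_steps: int = 5) -> str:
    """Iterate: ask the controller for an action, run the tool, record the observation."""
    history: list[str] = []
    for _ in range(max_steps):
        action, argument = controller(goal, history)
        if action == "finish":
            return argument
        history.append(TOOLS[action](argument))
    raise RuntimeError("step budget exhausted before the controller finished")


if __name__ == "__main__":
    print(run_agent("AI news"))
```

The `max_steps` budget is a design choice worth keeping in any real version of this loop, since a controller that never chooses to finish would otherwise run forever.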

Modular AI Systems and the AI Ecosystem

The benefits of modularization are described here across a number of technologies, from muskets to the Model T and container shipping: “Modularity is a critical driver of innovation, efficiency and cost decline. It is a core factor in making exponential technologies, well, exponential.”

Software in particular has benefited from modularization, through the use of APIs (Application Programming Interfaces). What we have described with ChatGPT plug-ins and LangChain are the API connectors for LLMs. APIs, and with them modularization, have arrived for LLMs.

I’ll go further: modularization, containerization, and component-based AI architectures, where those components are foundation AI models, are the next phase of AI’s advance towards AGI.

LLMs can be treated as subroutines, containerized, modularized, interfaced via a standard API - the prompt is the interface - so they can be chained together to do more powerful things. LLMs themselves are tools: language calculators, translators and interpreters; code whisperers, able to generate code. When the LLM can speak the language of code, that makes it adept at outputting potential code solutions to many problems; it can control other things beyond the written word.

Foundation AI models that have memory, the ability to self-critique and revise, and access to tools are quite powerful. Now add in planning and recursion that can build on itself, which leads to Generative Agents:

Chaining, Recursion and Planning yield Autonomous Intelligence.

Foundation AI models will really have their power felt when we can run them iteratively and recursively as a collective intelligence. We can do that using architectures like the JARVIS/HuggingGPT one.

The image for this article is the scientist fox, which is appropriate in light of the recent paper describing a generative agent based on GPT-4 that went about doing chemistry analysis and experiments.

This is why I’ve said before that we don’t need to go beyond GPT-4 level LLMs to get to AGI. GPT-4-level LLMs as components, chained together and managed in a controller-executor-agent architecture, may be just good enough to change the world.

This is the way. I have spoken.