Situational Awareness 4: Power and Peril

The ASI techno-explosion will reshape global power. National security is at stake. Should we lock down AI and try to control it? Or is the future of AI open?

Situational Awareness - The Story So Far

The intelligence explosion and the immediate post-superintelligence period will be one of the most volatile, tense, dangerous, and wildest periods ever in human history.

- Leopold Aschenbrenner

Our previous three articles on Leopold Aschenbrenner’s Situational Awareness dealt with the race to AGI, AI automation and acceleration, and the potential blockers and limits on AI scaling and progress. This article deals with the risks and perils associated with the coming AGI and ASI, also known as Superintelligence.

Aschenbrenner paints a scenario of AGI in the near-term feeding into AI progress acceleration that moves us quickly into Superintelligence:

The billion superintelligences would be able to compress the R&D effort humans researchers would have done in the next century into years. Imagine if the technological progress of the 20th century were compressed into less than a decade. We would have gone from flying being thought a mirage, to airplanes, to a man on the moon and ICBMs in a matter of years. This is what I expect the 2030s to look like across science and technology.

AGI and ASI: It’s not “If” but “When”

Aschenbrenner’s ‘fast takeoff’ scenario depends on several underlying assumptions: We’ll have the scale, the data, and the algorithms to deliver AI systems that can automate high-level thinking. We previously considered the question of what might block this scenario, but now let’s consider the other side: What if he’s right?

Or even if not quite right on exact timelines, what if he is right in the broad sequence? Our own review of blockers to progress concluded that “practical limits and blockers could slow AI development.” Slow, not stop. AI’s timeline is uncertain, but AI progress is not. It’s not a matter of if we get to AGI and ASI, but “when”.

What if AGI arrives, and then AI-enabled technology acceleration delivers ASI and the Superintelligence explosion soon thereafter? We’ve predicted as much ourselves: AGI will happen soon. ASI will follow. The Singularity is inevitable.

Timelines of AGI within 5 years and the Superintelligence techno-explosion within the next 10 years are realistic. The future is closer than you think.

The Intelligence Explosion

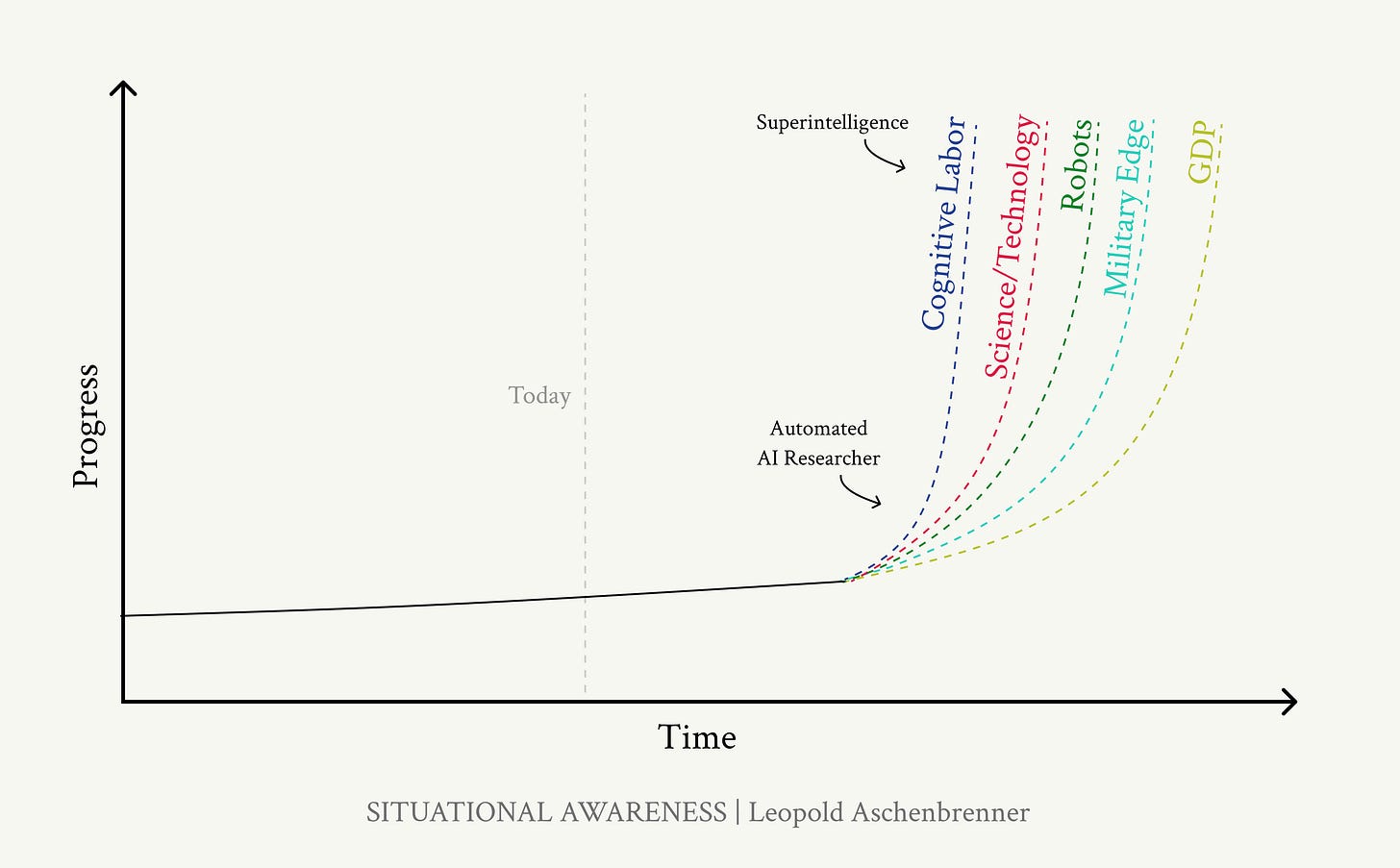

The primary after-effect of AGI-level automation and ASI will be massive acceleration in technology and science. This shockwave of progress will have cascading effects that super-charge economies and lead to tectonic power shifts. The power—and the peril—of superintelligence would be dramatic.

Aschenbrenner puts the accelerated science and technology trend this way:

In the intelligence explosion, explosive progress was initially only in the narrow domain of automated AI research. As we get superintelligence, and apply our billions of (now Superintelligent) agents to R&D across many fields, I expect explosive progress to broaden:

An AI capabilities explosion

Solve robotics

Dramatically accelerate scientific and technological progress

Because AI can accelerate knowledge work, it will feed into a technology-science explosion cycle, with more powerful AI accelerating both AI advances and technology in general. Superintelligence will feed on itself. This acceleration, taken to the limit, becomes the Singularity: science and technology racing to infinity.

There are real limits to technology acceleration that ASI cannot overcome. We cannot accelerate human drug trials, physical experiments, or the building of new computer chips, vehicles, or materials. Many things will take a certain amount of time.

Just as air friction causes a falling object to reach a terminal velocity, the friction of physics, social habits, human adoption, legal and political constraints, and more will cause technology curves to hit a terminal velocity. We will approach but never hit the Singularity.
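To make the terminal-velocity analogy concrete, here is a minimal sketch, not a forecast. It assumes the simplest drag model from physics (a constant push opposed by friction proportional to speed) and applies it to the "speed" of progress. The names `DRIVE`, `FRICTION`, and `progress_speed` and their values are hypothetical, chosen only for illustration.

```python
import math

# Hypothetical, illustrative parameters (arbitrary units, not estimates).
DRIVE = 2.0      # constant push on progress, e.g. from AI-driven R&D
FRICTION = 0.5   # drag from physics, adoption, legal and political limits

# With linear drag, speed settles at drive / friction.
TERMINAL_SPEED = DRIVE / FRICTION


def progress_speed(t, drive=DRIVE, friction=FRICTION):
    """Speed at time t for dv/dt = drive - friction * v, starting from v(0) = 0."""
    return (drive / friction) * (1.0 - math.exp(-friction * t))


if __name__ == "__main__":
    for t in (0, 1, 2, 5, 10, 20):
        print(f"t={t:>2}  speed={progress_speed(t):.3f}  terminal={TERMINAL_SPEED:.1f}")
```

In this toy model the speed of progress keeps rising but flattens toward `DRIVE / FRICTION` and never exceeds it, which is the sense in which a curve can approach a limit without racing to infinity.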

Aschenbrenner mentions three more major impacts and outcomes of the Superintelligence explosion:

An industrial and economic explosion.

Provide a decisive and overwhelming military advantage.

Be able to overthrow the US government.

Singularity or not, Superintelligence-enabled technology acceleration will yield bursts of innovation sufficient to upend industries and economies, shake societies, and reset the global order.

Economic Impact Waves

“An industrial and economic explosion”

Management firms and industry analysts are confirming and quantifying potential AI impacts. A June 2023 McKinsey report predicted that generative AI will unleash the next wave of productivity and “could add the equivalent of $2.6 trillion to $4.4 trillion annually.”

This value-add from AI cuts across many different industries, and about 75% of the potential value from generative AI use cases falls across four areas: Customer operations, marketing and sales, software engineering, and R&D.
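As a back-of-the-envelope check on what those two figures imply together, the sketch below applies the 75% share to both ends of the quoted range. It assumes the share holds uniformly across the range, which the quoted passages do not state; the function name `four_area_value` is made up for this example.

```python
# Figures quoted above from the June 2023 McKinsey report.
LOW_TRILLIONS = 2.6      # low end of annual value added, in trillions of USD
HIGH_TRILLIONS = 4.4     # high end of annual value added, in trillions of USD
FOUR_AREA_SHARE = 0.75   # share attributed to the four named areas


def four_area_value(total_trillions, share=FOUR_AREA_SHARE):
    """Value (in trillions of USD) attributed to the four areas.

    Assumption: the 75% share applies equally at both ends of the range.
    """
    return total_trillions * share


low = four_area_value(LOW_TRILLIONS)
high = four_area_value(HIGH_TRILLIONS)

if __name__ == "__main__":
    print(f"Four-area value: ${low:.2f}T to ${high:.2f}T per year")
```

Under that assumption, the four areas account for roughly $1.95 trillion to $3.3 trillion of the annual total.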

This projection is over the next few years and based on near-term AI capabilities (current AI up to AGI, but not beyond). This is just the initial wave.

Beyond that is the equivalent of the next industrial revolution. We’ll have tens of trillions in AI value added over the next decade or two to our economy, as billions of robots, drones, virtual AI workers, and automated AI systems augment, enhance and ultimately displace human labor across almost all tasks, jobs and industries.

ASI’s Military Impact

“Provide a decisive and overwhelming military advantage.”

AI has already gone to war. Pre-AI precision weapons have already proven highly useful, obsoleted prior generations of weapons, and changed the course of war; for example, FPV drones saved Ukraine and stalled Russia’s 2022 invasion, while Ukraine’s sea-borne drone-ships have decimated Russia’s Black Sea Fleet.

As drones climb the ladder to full autonomy, remotely piloted drones will be replaced with AI-piloted drone technology. AI-piloted autonomous vehicles and weapons will operate on land, at sea, and in the air.

Aschenbrenner goes beyond drones to mention “superhuman hacking,” “robo-armies,” “inventions of new WMDs,” and “completely new paradigms we can’t yet begin to imagine.” He adds further that those possessing ASI will have an economic and production advantage that will multiply the ASI technology edge.

The Security Implications

The most serious risks with AI broadly, and AGI and Superintelligence specifically, fall into three risk categories:

AI Alignment: Alignment means AI follows the values and intentions of its human users. There is a risk that AI might break alignment safeguards and become a self-perpetuating risk to humans.

AI Reliability: Reliability is operating as designed; unreliable AI creates risks and harm. It’s related to but distinct from alignment: Consider AI in a self-driving car that makes a mistake and causes an accident, or AI that hallucinates a healthcare fact and misleads about a medical condition.

AI Security: The risk of AI and its power falling into the ‘wrong’ hands, where it is abused for nefarious ends. This category includes the risk of criminals or random individuals misusing AI at a low level, for misinformation or mischief. It also includes the risk that state actors, whether rogue regimes like Iran or Russia or our nearest-peer authoritarian rival China, acquire AGI and then use it to our detriment.

I explore these three AI safety concerns in “AI Safety is a Technical Problem,” where I argue for treating AI Alignment and other safety issues as solvable technical problems and not ethical dilemmas (which they really are not).

Aschenbrenner deals with AI Alignment risk in his section “Superalignment,” where he expresses hope that “superalignment is a solvable technical problem,” as well as a concern that a fast take-off of ASI capabilities will leave little room for error, because ASI will be beyond our understanding.

AI Reliability is also solvable as a technical problem, akin to how any engineered system is made reliable. We see some of those systems already: RAG improves factual reliability; prompt and output guardrails check and remove harmful content. Testing and robust controls will become more extensive over time.

However, AI Security is not just a technical problem and requires more than just technical solutions.

“Lock Down the Labs!”

Superintelligence (ASI) is a source of great power; it leads to technological advance, economic dominance, and military supremacy. A competing power (China), rogue nation (Iran), or other actors (terrorists, criminals) acquiring AGI or ASI may upset economic and military balance; it poses a national security risk that scales in importance as AI capabilities scale.

Right now, there is little risk that AI alone will tilt any power balances. However, Aschenbrenner compares Superintelligence to a weapon, specifically the nuclear bomb. It has power far beyond what we can do today with AI, and has great military significance. This leads him to state:

AGI secrets are the United States’ most important national defense secrets

AI will become the #1 priority of every intelligence agency in the world

The algorithmic secrets we are developing, right now, are literally the nation’s most important national defense secrets—the secrets that will be at the foundation of the US and her allies’ economic and military predominance by the end of the decade … the secrets that will determine the future of the free world. - Leopold

What is done in AI labs today bears no relation to that level of seriousness. He quotes Marc Andreessen saying the AI Labs have “the security equivalent of Swiss cheese.”

Currently, labs are barely able to defend against script-kiddies, let alone have “North Korea-proof security,” let alone be ready to face the Chinese Ministry of State Security bringing its full force to bear.

High-tech companies have been penetrated and will continue to be if they don’t radically change practices. Achieving AGI internally requires a skilled team applying AI training algorithms, with AI model weights as the end result. So Leopold sees these as the assets to worry most about:

There are two key assets we must protect: model weights (especially as we get close to AGI, but which takes years of preparation and practice to get right) and algorithmic secrets (starting yesterday).

Only a near-peer in AI technology, with sufficient compute and skilled AI researchers, can make use of AI algorithmic secrets. Only China fits that description, but there are enough independent efforts by Chinese AI teams to suggest that stealing our AI algorithms won’t matter as much as we might think. AI labs are developing better ideas independently.

Rogue actors could take advantage of model weights, but using AI at scale presumes technology infrastructure, and leveraging it requires technical competence. For example, a rogue nation might use AI for bio-weapons research, but even if AI discovered something, they would still need to develop and test it, using specialized equipment etc. Dangerous knowledge is just step one in exploiting a risk vector.

The bottom line is that the nefarious use of AI by rogue actors is a real risk, but it is likely to end up being low-level and not tip the scales of power dramatically.

We should take AI lab security more seriously and avoid leakage of AI weights and algorithms, both for national security and economic reasons. I agree with Aschenbrenner’s summation of the stakes:

Superintelligence is a matter of national security.

America must lead.

We need to not screw it up.

The Choice: Open vs Closed

Aschenbrenner reasons that since superintelligence is a matter of national security, it should be treated like a military secret and developed by a governmental agency, in an effort akin to the Manhattan Project. He presents this at length in the section “The Project.”

However, we should also recognize that there is a path other than the “only the paranoid survive” approach of locking down the labs and creating a Manhattan Project for ASI.

His train of thought is both detailed and well thought-out, so I won’t engage in the details of what parts are credible and what parts I disagree with. Each step along his path seems cogent, but he ends in a place that could be more dangerous than the risks he tries to avoid.

One dangerous outcome is if control of AI is in the hands of a few; that gives great power over our most important technology. It’s a risk if that control is in China, in Google, at the CIA, or in the hands of a billionaire. The potential for societal control and a society of control is great and dangerous.

I might trust Google more than China, but the real divide is whether we have an open AI ecosystem or a closed one.

The closed AI world will have a few players racing in secret. The USA won’t be alone; China won’t stop. However, because of the secrecy, Team USA will go slower than if AI research were open, since it will not be able to draw on the ideas of others. Or they might sit on AI technology because they are comfortable with the status quo, like Google was until ChatGPT disrupted them.

The open AI world will see no one AI model ‘to rule them all’ but a plethora of AI options driven by dozens of competing AI companies working to advance AI technology for their own advantage. When nobody has a monopoly on AI, nobody can abuse AI technology for control. Freedom and choice are the antidote to a world of AI controls.

The good news is America is suited to lead in an open AI world: We are an open society with a largely free economy. For the same reasons we are not that good at keeping secrets, we are very good at innovation, competition, and leap-frogging, i.e., taking ideas from others to make even better ideas and products.

AI’s Open Alternative

Aside from being good for the USA, there is this stunning fact: We are in the open AI world, and there’s no going back.

Leading companies, specifically Meta, are committed to open source AI and keeping leading AI open. Open source AI has succeeded so well that it appears to be closing the gap with closed AI models (see Figure).

The competition in AI is thick and fierce, and multiple cloud providers - Microsoft, AWS/Amazon, and Google - depend on and benefit from a large and rich ecosystem of AI models. They will be incentivized to have a diversity of AI models.

AI companies in other nations, like France’s Mistral, Japan’s Sakana, and China’s tech companies such as Alibaba and Baidu, will make sure the US has no real monopoly on AI.

The importance of Meta in keeping AI open cannot be overstated. For Llama 3.1, not only did Meta release open model weights for a GPT-4-level AI model, but they also allowed distillation of other AI models from them, and they shared their algorithmic secrets of how they built Llama 3.1. The Llama models ignited an open-source AI movement that uses fine-tuning and adaptation, and the learning from that is making

With all these players in this race, it’s highly unlikely that any one organization or even one nation will be able to fully control AI, and that’s a good thing. It’s also unlikely we will see “one AI model to rule them all,” but rather a rich diversity of AI models and AI systems that serve different purposes and tasks.

Being open in AI does have its problems. For example, AI technology leaks more easily to rogue actors; abuses will occur. An open AI world means fast-followers, and so China may find it easier to keep close to US AI companies; this is also true in reverse, as US firms can learn from China’s AI innovations.

An open AI world doesn’t obviate the usefulness of a US Government AI project. It may even underscore it, in that we might find it helpful to have an open AI model for the US Government so our own Government doesn’t depend on private entities for its AI. In a similar way, other nations might pursue variations on ‘sovereign AI’.

We won’t be alone in any AI effort, and thus secrecy won’t stop others.

Conclusion

Humility is in order when making projections and predictions about something that is in the process of going exponential. However, the basic points stand:

We will get to AGI and beyond that to ASI in coming years, likely achieving Superintelligence in the next 5 to 10 years.

This will accelerate technology, disrupt our economy while also adding to productivity and prosperity, and reshape global power dynamics. The stakes of Superintelligence will be high.

We will be tempted by the risks of AI to over-regulate AI; the security concerns of AI falling into the wrong hands will lead some to want to lock AI down. It won’t work.

The best path forward for beneficial development of AI to AGI and beyond and America’s successful dominance in AI is to lean in on our traditions of freedom: Welcome free-market competition in safe and responsible AI models and apps; embrace open source AI and pursuit of open AI models; make sure that AI ends up in the hands of all and not controlled by the few.

I’ll close by agreeing with Mark Zuckerberg, when he recently said:

“I believe that open source is necessary for a positive AI future. AI has more potential than any other modern technology to increase human productivity, creativity, and quality of life – and to accelerate economic growth while unlocking progress in medical and scientific research. Open source will ensure that more people around the world have access to the benefits and opportunities of AI, that power isn’t concentrated in the hands of a small number of companies, and that the technology can be deployed more evenly and safely across society.” - Mark Zuckerberg