Gemini 2.5 Pro & DeepSeek V3 - Best Models Yet

Google’s new Gemini 2.5 Pro tops Chatbot Arena and is the new coding GOAT. The new DeepSeek V3 0324 also matches SOTA models. Both are billed as “our most advanced AI model to date.”

Introduction

The AI race isn’t taking a break. This week, Google released a new best-model-yet, Gemini 2.5 Pro.

Since the start of the year, AI model progress has surprised us several times: DeepSeek’s R1 came out of nowhere to amaze AI consumers and shock the US stock market. Then OpenAI stunned with o3-mini’s SOTA AI reasoning. Soon after, xAI upstaged them with its best-model-ever Grok 3, then Anthropic showed its best model yet with Claude 3.7 Sonnet. Given all that progress, OpenAI’s GPT-4.5 felt underwhelming despite being SOTA. Meanwhile, we got small, efficient AI reasoning models like QwQ-32B.

Less than 2 months after giving us Gemini 2.0 Pro and Gemini 2.0 Flash Thinking, Google decided to tease Gemini 2.5 Pro Experimental. Since Google iterates fast on incremental releases of new features and models, such a quick model update is not a surprise. What is surprising is how good Gemini 2.5 Pro is.

Gemini 2.5 Pro - Technical Capabilities

Our first 2.5 model, Gemini 2.5 Pro Experimental, leads common benchmarks by meaningful margins and showcases strong reasoning and code capabilities. - Google

Gemini 2.5 Pro was trained to be an AI reasoning model through more RL training, and it also features the same native multimodality across text, audio, images, and video we have gotten from all recent Gemini models. This makes Gemini 2.5 a great all-around AI model that is highly intelligent, demonstrating robust performance across math, coding, knowledge-intensive tasks, and visual understanding.

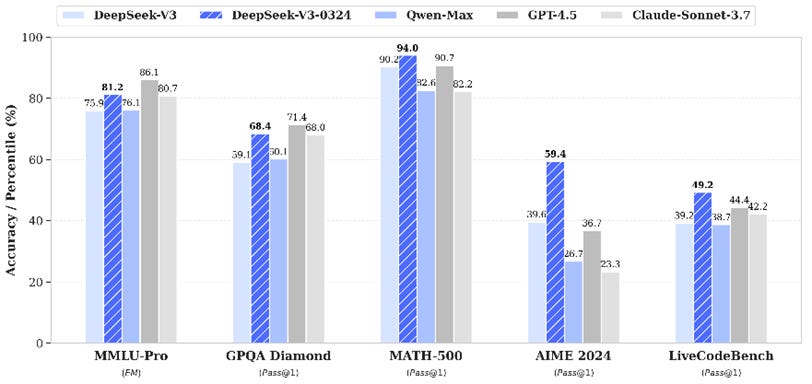

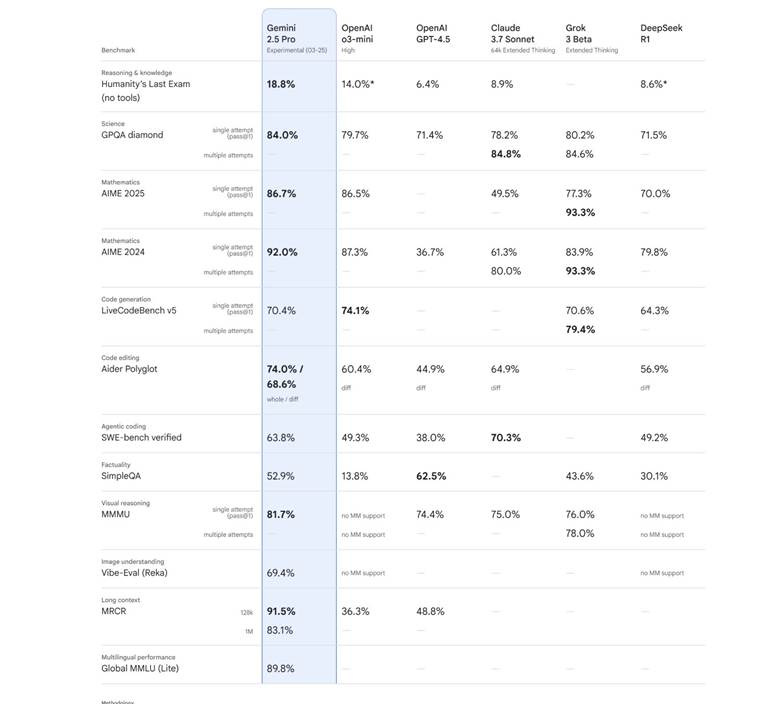

Gemini 2.5 Pro exhibits state-of-the-art performance across a range of benchmarks:

It tops the LMArena leaderboard at 1440, 40 points above former leader Grok 3.

It leads in mathematics (92% on AIME 2024) and science (84% on GPQA diamond) benchmarks, without employing cost-increasing test-time techniques.

It scores a SOTA 18.8% on Humanity's Last Exam, the new benchmark that evaluates the frontiers of human knowledge.

It leads on visual understanding benchmarks, scoring 81.7% on MMMU.

Coding is another strength, with Gemini 2.5 Pro scoring 70% on LiveCodeBench, and achieving a SOTA 74% on Aider polyglot.

Gemini 2.5 Pro supports an extended context window of 1 million tokens, and scores highly on understanding across such a large context (83.1% on 1M context MRCR). This means it can process vast amounts of information, responding with consistent, relevant, and useful outputs, for tasks based on lengthy documents, large codebases, or complex projects.
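To put the 40-point leaderboard gap mentioned above in perspective, here is a minimal sketch that converts a rating gap into an expected head-to-head win rate. It assumes the standard Elo logistic formula with a scale of 400; the arena's actual rating method may differ in detail, and the function name is ours.

```python
def expected_win_rate(rating_gap: float, scale: float = 400.0) -> float:
    """Expected score of the higher-rated model under the standard Elo logistic model.

    rating_gap: higher rating minus lower rating, in Elo-style points.
    scale: 400 is the conventional Elo scale (an assumption, not taken from the leaderboard).
    """
    return 1.0 / (1.0 + 10.0 ** (-rating_gap / scale))


if __name__ == "__main__":
    gap = 40  # the lead over the former top model, as quoted in the article
    print(f"Expected win rate at a {gap}-point gap: {expected_win_rate(gap):.1%}")
```

Under these assumptions a 40-point lead works out to the higher-rated model being preferred in roughly 56% of head-to-head comparisons: a real edge, but not a blowout.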

Gemini 2.5 Pro was Trained to Reason

Now, with Gemini 2.5, we've achieved a new level of performance by combining a significantly enhanced base model with improved post-training. Going forward, we’re building these thinking capabilities directly into all of our models, so they can handle more complex problems and support even more capable, context-aware agents. - Google

Gemini 2.5 Pro has received additional post-training to make it a “thinking model,” using RL techniques similar to those used to make Gemini 2.0 Flash Thinking. Perhaps Gemini 2.5 Pro was created from Gemini 2.0 Pro by performing additional post-training for reasoning.

This enables Gemini 2.5 Pro to reason through its responses before generating final answers, which improves its accuracy and its ability to solve intricate problems in areas such as coding, science, and mathematics. This is why, for example, Gemini 2.5 Pro does better than even o3-mini-high on math-related benchmarks such as AIME 2024.

Gemini 2.5 Pro is a Coding Gem

Gemini 2.5 Pro excels in creating visually compelling web applications and agentic code applications, as well as code transformation and editing. Gemini 2.5 Pro achieves impressive scores on SWE-Bench Verified, Aider Polyglot, and LiveCodeBench v5, showing its strength in various software engineering tasks.

Google themselves showed off how you can use Gemini 2.5 Pro to build web browser apps from a single prompt, showing examples of a Mandelbrot explorer and an arcade-style Dino game. It’s definitely a significant improvement over its predecessor, Gemini 2.0.

Beyond the benchmarks and demos, many users and AI influencers have been extremely impressed with Gemini 2.5 Pro’s coding capabilities. For example:

Rob Shocks was able to connect Gemini 2.5 Pro with Cursor AI to build a Notion clone, creating a full app without writing any code.

Matt Berman got several great coding results, including an incredible Rubik’s cube visualization that got to a 10x10 cube and that moved, rotated, and persisted colors.

DeepSeek V3 0324

Google wasn’t alone this week. DeepSeek released an updated V3 model called V3 0324, with improvements that make the updated base model more competitive with GPT-4.5 and Claude 3.7 Sonnet, particularly in mathematics and coding.

DeepSeek V3 0324 features a transformer-based architecture with mixture-of-experts, the same as V3, with 671 billion parameters but only 37 billion activated per token, making it computationally efficient. This has enabled DeepSeek to offer the model at low API cost while still making money hosting the AI model.
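To make the efficiency argument concrete, here is a minimal sketch of how a mixture-of-experts layer touches only a fraction of its parameters per token. The 671 billion and 37 billion figures come from the article; the router is a generic toy softmax top-k gate with made-up scores, and is not DeepSeek's actual routing scheme.

```python
import math

TOTAL_PARAMS_B = 671   # total parameters in billions, as quoted in the article
ACTIVE_PARAMS_B = 37   # parameters activated per token in billions, as quoted


def active_fraction(active: float, total: float) -> float:
    """Share of the model's parameters used for any single token."""
    return active / total


def top_k_route(gate_logits: list[float], k: int) -> dict[int, float]:
    """Toy router: keep the k highest-scoring experts and renormalize their weights.

    Returns {expert_index: weight}, with the weights summing to 1.
    """
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    peak = max(gate_logits[i] for i in top)  # subtract the max for numerical stability
    exps = {i: math.exp(gate_logits[i] - peak) for i in top}
    norm = sum(exps.values())
    return {i: e / norm for i, e in exps.items()}


if __name__ == "__main__":
    share = active_fraction(ACTIVE_PARAMS_B, TOTAL_PARAMS_B)
    print(f"Active share per token: {share:.1%}")

    # Hypothetical gate scores for one token over four experts; only two are run.
    print(top_k_route([0.1, 2.0, -1.0, 1.5], k=2))
```

With the quoted figures, only about 5.5% of the weights do work on any given token, which is why per-token compute looks like that of a much smaller dense model even though the full parameter set still has to be stored.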

Most importantly, this is an open model available on HuggingFace under the MIT open-source license. This makes it the highest-performing open-source AI model available right now.

DeepSeek V3 0324 doesn’t compete with AI reasoning models. For example, Gemini 2.5 Pro performs better on math, coding, and GPQA benchmarks thanks to AI reasoning. However, DeepSeek will build on this improved V3 base model to make their improved R2 AI reasoning model. The R2 reasoning model might be released as soon as April.

Conclusion – Advantage Google

Gemini 2.5 Pro is an excellent all-around AI model with some great AI coding capabilities. You can try this new best-model-ever Gemini 2.5 Pro on AI Studio for free, and, since it is a strong coding model, you can connect it with AI coding assistants like Roo or Cline. For now, it’s all free.

Theo was so impressed with Gemini 2.5 Pro that his reaction was “Google won.” Here’s why he might be right.

Google’s flywheel of progress: After falling behind OpenAI and being slow to respond, Google has, since the Gemini 1.0 release in late 2023, built a great flywheel of AI model innovation, where they rapidly iterate on releases. Just last week, Google announced the Gemini Canvas feature; the week before brought the Gemma 3 models, native image generation in Gemini 2.0 Flash, better Deep Research, and Gemini personalization.

Gemini 2.5 Pro is not just the best Google AI model release yet. It’s a sign Google is improving at a fast clip and will continue to rapidly progress.

AI model commoditization: Microsoft's CEO believes that AI models are becoming commoditized, and the action is in the layer above. He may be right. The advantage of any AI model seems fleeting, and performance is becoming more uniform across AI models as competitors copy what works.

However, don’t imagine AI models will be commoditized like raw materials or common industrial parts. AI models will become commoditized like semiconductors. Chips such as Intel microprocessors or Nvidia GPUs are extremely complex, and the technology stack used to design, build, and distribute semiconductor chips is capital-intensive and technically difficult. This sounds like the capital-intensive and technically challenging AI model business.

The chip business is competitive yet so challenging that only a few players can compete in each market segment. If one player dominates (like Nvidia does now in GPUs), they can extract high margins, but if not, margins fall and prices are based on marginal costs.

Similarly, as long as OpenAI had the only state-of-the-art AI model, they could charge what they wanted for it. But when competitors are matching each other in AI model performance, pricing matters.

AI marginal cost advantage: We are seeing a trend that may make things difficult for OpenAI and Anthropic longer term. If you look at the price per token for these AI models, OpenAI and Anthropic have been charging more than Google and DeepSeek for the same level of performance. On an API call basis, DeepSeek-R1 is 20 times cheaper than the o1 reasoning model; Gemini 2.0 Flash is 50 times cheaper than GPT-4o, never mind the crazy pricing on GPT-4.5.
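For readers who want to check such price multiples against their own workload, here is a rough sketch of the arithmetic. The per-million-token prices below are made-up placeholders chosen to produce a 20x gap, not real price-list figures, and the 25% output share is likewise an assumption; substitute current published prices and your own traffic mix.

```python
def blended_price(input_price: float, output_price: float, output_share: float = 0.25) -> float:
    """Average price per million tokens for a workload with the given share of output tokens."""
    return (1.0 - output_share) * input_price + output_share * output_price


def monthly_cost(tokens_millions: float, price_per_million: float) -> float:
    """Total bill for a month of traffic, in the same currency as the prices."""
    return tokens_millions * price_per_million


if __name__ == "__main__":
    # PLACEHOLDER prices per million tokens (input, output); not real vendor pricing.
    premium = blended_price(10.0, 30.0)
    budget = blended_price(0.5, 1.5)

    volume = 500  # hypothetical workload: 500 million tokens per month
    print(f"Premium model: {monthly_cost(volume, premium):,.2f}")
    print(f"Budget model:  {monthly_cost(volume, budget):,.2f}")
    print(f"Price ratio:   {premium / budget:.0f}x")
```

Because input and output tokens are priced differently, the effective multiple depends on the workload's mix, so a headline "N times cheaper" figure is best read as approximate.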

Google, perhaps due to cloud compute and self-made TPUs, and DeepSeek, due to pursuing inference-cost-effective fine-grained MoE models, seem to have a cost advantage.

The bottom line is that Google’s fast iteration pace, technical depth, deep corporate pockets, and potential cost advantages combine to put Google in an advantageous position to win the AI model race long-term.